Amazon is spending millions on training an ambitious large language model in the hope of competing with top models from OpenAI and Alphabet, Reuters reported.

With two trillion parameters, the model, code-named “Olympus,” may rank among the largest models being trained. OpenAI’s GPT-4, one of the best models currently available, is reported to have one trillion parameters.

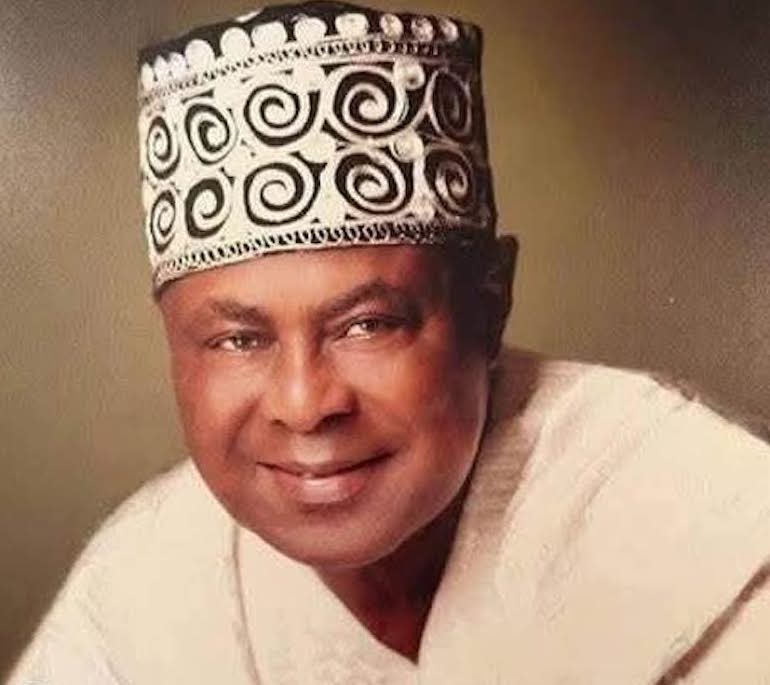

Rohit Prasad, the former head of Alexa, who now reports directly to CEO Andy Jassy, is leading the team. As Amazon’s head scientist for artificial general intelligence, Prasad brought together researchers from the Amazon science team and from Alexa AI to work on training models, unifying the company’s AI efforts with dedicated resources.

Amazon has already trained smaller models, including Titan. It has also partnered with AI model startups such as Anthropic and AI21 Labs, making their models available to customers of Amazon Web Services.

According to Reuters, Amazon believes that having in-house models could make its offerings on AWS more attractive, as enterprise clients there seek out high-performing models.

Training larger AI models is more expensive because of the amount of computing power required. During an earnings call in April, Amazon executives said the company would increase its investment in generative AI and LLMs while cutting back on transportation and fulfilment in its retail business.